Victims in Court: AI-Generated Testimony and the Future of Justice

In a groundbreaking legal moment, the voice of a murdered man speaks to his killer through artificial intelligence, raising profound questions about authenticity, ethics, and the future of justice systems.

In an Arizona courtroom this May, something unprecedented happened: Christopher Pelkey, a man killed in a 2021 road rage incident, seemingly "spoke" directly to his killer. Through AI-generated video and audio, Pelkey delivered a victim impact statement that moved the judge to increase the sentence of Gabriel Horcasitas, the man who shot him.

"To Gabriel Horcasitas, the man who shot me: It is a shame we encountered each other that day in those circumstances," the digital recreation of Pelkey said. "In another life, we probably could've been friends. I believe in forgiveness and in God who forgives. I always have and I still do."

This remarkable moment represents a significant milestone for justice systems: the first known instance of an AI-generated "deepfake" delivering a victim impact statement in court. As we navigate this technological frontier, it's critical that we examine the issues raised by AI-generated content in legal proceedings.

The Blurred Line Between Authentic and Synthetic

The Pelkey case forces us to confront a fundamental question: What happens when AI recreations can influence a judge or jury? We have no way of knowing whether the digital Pelkey's words of forgiveness accurately represent what the real Christopher Pelkey would have said had he lived. This uncertainty creates a troubling evidentiary gap that legal systems have not yet adequately addressed.

The AI simulation wasn't perfect, but it was enough to trigger profound emotional responses. Maricopa County Superior Court Judge Todd Lang was visibly moved, telling the family, "I love that AI. Thank you for that. I loved the beauty in what Christopher (said)… I felt like that was genuine." His response illustrates the persuasive power these technologies can wield in emotionally charged settings like sentencing hearings.

Arizona's Chief Justice Ann Timmer acknowledged this double-edged sword in a statement, noting that while AI offers potential benefits in the justice system, it could also "hinder or even upend justice if inappropriately used." This caution is well-founded, given the potential to manipulate legal outcomes with emotionally compelling AI-generated content.

Family Authorship and Ethical Complexities

What makes this case especially nuanced is that Pelkey's family created the AI representation themselves. His sister, Stacey Wales, worked with her husband and a friend experienced in AI technology to develop what she described as "a Frankenstein of love." For Wales and her family, this digital resurrection provided a meaningful way to include her brother's voice among the 49 impact statements collected for the sentencing.

"It was important not to make Chris say what I was feeling, and to detach and let him speak - because he said things that would never come out of my mouth, but I know would come out of his," Wales explained. This deliberate effort to separate her own feelings from what she believed her brother would say demonstrates the care and intentionality behind the creation.

The question of authorship in these cases carries some ethical weight. When families create these experiences out of love and a genuine desire to represent their lost loved ones, should they have greater ethical latitude than commercial or governmental actors might? Who are we to say this approach wasn't appropriate for the Pelkey family? Perhaps they felt that this was precisely the most fitting way to honour Christopher's memory and ensure his perspective was represented at the sentencing of his killer. Does this matter? Or should such content never be allowed, given the gravity of the setting and its potential to influence the outcome?

A Blend of Authentic and Synthetic Media

One of the most interesting aspects of the Pelkey case is that the synthetic presentation wasn't the only depiction of Christopher Pelkey shown in court. The synthetic version introduced a clip of the ‘real’ Christopher, who strongly articulated his perspective on life and faith.

The inclusion of authentic footage was compelling and showed the real person behind the recreation. The incorporation of genuine elements grounds the synthetic creation, potentially making the whole experience more persuasive and emotionally resonant. We certainly get a more complete picture of who Christopher Pelkey was through these authentic elements, even as they're incorporated into a wider artificial construct.

This hybridization points to an emerging pattern where the boundaries between real and synthetic media become increasingly blurred. As these technologies advance, determining what constitutes authentic evidence will become more challenging for our legal system.

Legal Frameworks and Regulatory Horizons

The Pelkey case emerges at a time when governments worldwide are scrambling to develop frameworks for regulating deepfakes and AI-generated content. While much of this legislation focuses on political deepfakes and non-consensual sexual content, the use of this technology in courtrooms presents unique challenges that require specific consideration.

To my knowledge, there is currently no comprehensive federal legislation in the United States specifically addressing deepfakes in legal proceedings. However, multiple bills are under consideration that could affect how such technologies are used in courtrooms.

States have been more proactive, with Arizona forming a committee to explore guidelines for AI use in courtrooms. Several states have enacted laws addressing non-consensual sexual deepfakes, deepfakes in political campaigns, or both. Tennessee's Ensuring Likeness Voice and Image Security (ELVIS) Act specifically protects individuals' voices from unauthorised AI replication.

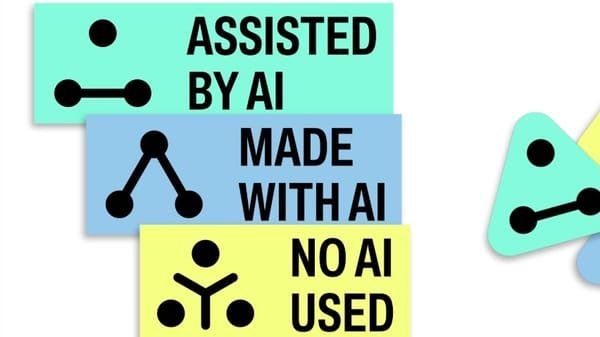

The European Union has taken a more comprehensive approach with its Artificial Intelligence Act, which entered into force in 2024. The Act defines a "deepfake" as "AI-generated or manipulated image, audio or video content that resembles existing persons, objects, places, entities or events and would falsely appear to a person to be authentic or truthful." Rather than prohibiting deepfakes entirely, the EU has opted for transparency requirements, mandating that content generated or modified with AI be clearly labelled as such.

This approach is particularly relevant to courtroom settings, where the EU rules would require any AI-generated media to be explicitly identified (assuming it were permitted as a victim statement at all, which I suspect is unlikely). The legislation implements a dual system of technical marking by providers and clear labelling by deployers (those who use the AI systems), so that AI-generated media can be readily identified. This transparency-focused approach is consistent with how the Pelkey case was handled: the AI simulation explicitly identified itself as such at the beginning of the presentation and went on to include authentic footage.

As these regulatory frameworks continue to develop globally, courts must establish clear guidelines on whether, and how, AI-generated content can be used in legal proceedings. These guidelines should address questions of authentication, consent, disclosure requirements, and the appropriate weight to give such content.

Critical Questions for the Future

As we consider the implications of the Pelkey case and the broader trend of AI entering courtrooms, several pressing questions emerge:

- Authentication Standards: How can courts verify the authenticity and representativeness of AI-generated testimony? What standards should be applied to ensure such content doesn't misrepresent the person it depicts?

- Consent and Agency: Who has the right to create and present AI versions of deceased individuals? Should prior consent be required, or can next of kin make these decisions? Should these creations be allowed at all?

- Disclosure Requirements: Is disclosure of AI-generated content legally mandated in a court setting? In the Pelkey case, the AI creation explicitly identified itself as such at the beginning of the video, but it is not clear whether this was legally required, even if it seems obvious that it should be.

- Weight: If generated content is allowed under certain circumstances (and that is an IF!), how do we limit the influence such content can have on sentencing decisions?

- Technological Advancements: As AI technology improves, making deepfakes increasingly indistinguishable from authentic media, how will our legal system adapt to maintain the integrity of evidence?

- Potential for Abuse: How can we prevent malicious actors from using this technology to manipulate legal outcomes? Could the emotional impact of seeing a deceased victim "speak" unduly prejudice a jury?

Finding the Balance

The Pelkey case reveals the perils and complexity of AI-generated content in legal proceedings. On one hand, it provided a grieving family with a meaningful way to include their lost loved one in an important legal process. On the other, it raises serious concerns about evidence integrity, emotional manipulation, and the boundaries of acceptable technological intervention in justice systems.

As we move forward, we must find a balance that embraces technological innovation in areas where it is clearly beneficial (e.g. democratising access to legal support), while preserving the foundational principles of justice. This balance requires thoughtful regulation, clear guidelines for courts, improved authentication technologies, and ongoing ethical dialogue about the appropriate use of AI in legal settings.

The digital resurrection of Christopher Pelkey represents just the beginning of what will likely become an increasingly common ethical issue. How we respond to these early cases will shape the future relationship between artificial intelligence and justice. By approaching these issues with both technological openness and ethical rigour, we can harness the potential of AI while safeguarding the integrity of our legal systems.

In this new future where the deceased can seemingly speak through technology, we must ensure that truth, consent, and justice remain among our guiding principles when it comes to the law. The voice from beyond the grave may be artificial, but its impact on our legal system and society is profoundly real.