The Disclosure Dilemma: What Happens When We Know We're Talking to a Bot

How AI transparency requirements are reshaping human-machine interaction

In April 2025, Reddit erupted in controversy when researchers from the University of Zurich were discovered to have deployed AI bots that posed as real people in the popular r/changemyview forum. The bots had generated over 1,000 comments, taking on identities (including a rape victim), all without users' knowledge or consent. Reddit's response was swift and uncompromising: the company announced it was pursuing legal action, calling the experiment deeply wrong on both a moral and legal level.

This incident highlights a critical question facing the AI industry: should users always know when they're interacting with artificial intelligence? As AI systems become increasingly sophisticated, it will not always be easy to tell what is on the other side of the digital wall, particularly in text-only media. Meanwhile, regulators worldwide are implementing disclosure requirements that will fundamentally change how we interact with AI, potentially in ways that neither lawmakers nor technologists fully anticipate. One thing is certain: we don't yet know enough about how disclosure and non-disclosure affect human behaviour, yet these issues are already affecting how people, communities and organisations develop, and will continue to do so.

Mandatory Transparency on the Horizon

European Union: The AI Act's Disclosure Requirements

The European Union has taken the lead in regulating AI disclosure through the AI Act, which came into force in August 2024. Starting in August 2026, the legislation will require clear disclosure when users interact with AI systems.

Under Article 50 of the AI Act, AI providers must inform users when they are interacting with an AI system "unless this is obvious". This applies to AI systems that are intended to interact directly with "natural persons" via text, voice, avatar and similar channels. Deepfakes ("AI-generated or manipulated image, audio or video content that resembles existing persons, objects, places, entities or events and would falsely appear to a person to be authentic or truthful", Art. 3(60) EU AI Act) will need to be labelled as artificially generated.

The law mandates that disclosure must be more than mere implication. For instance, customer service chatbots on e-commerce platforms must explicitly state something akin to: "You are now chatting with an AI assistant." The European Commission's guidance emphasises that this transparency is necessary to preserve trust and allow users to make informed decisions.

Non-compliance carries substantial penalties. The Act's highest tier of administrative fines, reserved for prohibited AI practices, reaches €35 million or 7% of a company's global annual turnover, whichever is higher. Breaches of transparency obligations such as those in Article 50 fall into a lower tier of up to €15 million or 3%. Even smaller infractions, such as providing incorrect, incomplete or misleading information to authorities, can lead to fines of up to €7.5 million or 1% of global turnover, again whichever is higher. (For SMEs and start-ups the same amounts and percentages apply, but the cap is whichever is lower.)
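To make the "whichever is higher" and "whichever is lower" rules concrete, here is a minimal Python sketch using the €35 million / 7% and €7.5 million / 1% figures quoted above. The function name `fine_cap` and its parameters are invented for illustration, and the result is only the ceiling for a tier, not a prediction of what a regulator would actually impose.

```python
def fine_cap(fixed_eur: int, pct: int, turnover_eur: int, is_sme: bool = False) -> int:
    """Return the maximum fine, in whole euros, for one penalty tier.

    fixed_eur    -- the fixed amount for the tier (e.g. 35_000_000)
    pct          -- the turnover percentage for the tier (e.g. 7)
    turnover_eur -- the company's global annual turnover in euros
    is_sme       -- True applies the "whichever is lower" rule
    """
    pct_amount = turnover_eur * pct // 100  # integer maths avoids float rounding
    if is_sme:
        return min(fixed_eur, pct_amount)
    return max(fixed_eur, pct_amount)


# Large company, EUR 1 billion turnover, top tier:
# 7% (EUR 70 million) exceeds the fixed EUR 35 million.
print(fine_cap(35_000_000, 7, 1_000_000_000))           # 70000000

# Start-up, EUR 20 million turnover, information tier:
# 1% (EUR 200,000) is lower than the fixed EUR 7.5 million.
print(fine_cap(7_500_000, 1, 20_000_000, is_sme=True))  # 200000
```

For a large company the percentage quickly becomes the binding figure, whereas for a small one the "whichever is lower" rule keeps the ceiling proportionate to its turnover.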

United States: A Patchwork of State-Level Initiatives

The United States takes a more fragmented approach to AI disclosure regulation, a result of its federal structure and of President Trump's rollback of President Biden's AI Executive Order. While there is as yet no comprehensive federal AI disclosure law, several initiatives are emerging at the state level. These differ significantly and cover different areas of AI:

California's Bot Disclosure Law requires businesses to disclose when customers are interacting with AI in certain commercial contexts, though enforcement has been limited.

New York City now requires employers using AI systems in hiring or promotion processes to inform job applicants and employees of such use, representing one of the first sector-specific disclosure mandates. A bill currently at committee stage “establishes comprehensive liability regulations for chatbots in New York, requiring proprietors to be legally responsible for misleading, incorrect, or harmful information provided by their AI systems”.

Florida requires certain political advertisements, electioneering communications and other political content created with generative AI to carry the disclaimer: "Created in whole or in part with the use of generative artificial intelligence (AI)." The disclaimer must be clearly printed, readable, and occupy at least 4 percent of the communication, depending on the type of media.

Tennessee's ELVIS Act (2024) was the first state law targeting AI deepfakes and voice cloning, protecting artists' rights.

Several states are considering additional disclosure requirements, particularly around automated processing of personal information and AI use in government services.

Industry Self-Regulation and International Trends

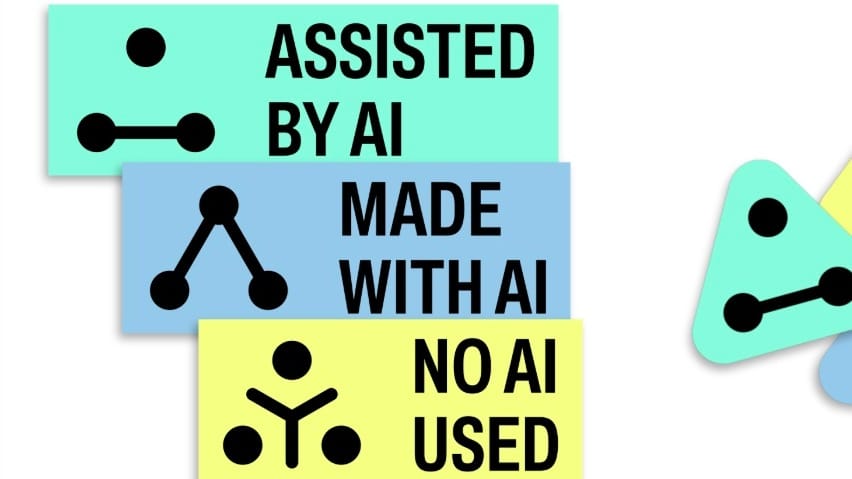

Beyond formal legislation, industry initiatives are emerging. The Content Authenticity Initiative, with over 3,000 members, is working to establish Content Credentials as a digital industry standard for provenance labelling.

Other countries are watching the EU's approach closely. Canada, Australia, and several other nations are developing their own AI governance frameworks, many of which include disclosure requirements.

Human Behaviour: Does Disclosure Change Everything?

While disclosure requirements may seem straightforward from a regulatory perspective, knowing you're talking to a bot might fundamentally alter human behaviour in complex and often counterintuitive ways.

The Trust Penalty

A finding that recurs across several studies is what researchers call the "AI disclosure effect" - a reduction in trust when AI use is revealed. A 2019 field experiment by Luo et al. found that disclosing chatbot identity at the start of conversations reduced purchase rates. Customers perceived disclosed bots as less knowledgeable and less empathetic than humans, and their interactions became more curt.

This pattern holds across separate experiments reported in recent research by Oliver Schilke and Martin Reimann, which consistently show that actors who disclose AI usage are trusted less than those who don't. The effect appears to stem from reduced perceptions of legitimacy: when people know AI is involved, they begin questioning the authenticity and value of the interaction itself. This seems to be true even when the content is well received: Osbourne and Bailey's study "Me vs the machine" found that personal advice written by ChatGPT was rated more positively than human-written advice, but only when participants were not told that ChatGPT had generated it.

The Exposure vs. Self-Disclosure Effect

Particularly concerning for companies is research showing that the negative trust impact is stronger when AI usage is exposed by third parties rather than self-disclosed. This suggests that while proactive disclosure carries reputational costs, being caught using AI without disclosure creates even greater damage to trust and credibility.

The Self-Disclosure Contradiction

The relationship between disclosure and personal sharing reveals another layer of complexity. Some studies show that people actually disclose more personal information when they know they're talking to an AI, especially on sensitive topics. In one study, veterans were more likely to reveal symptoms of post-traumatic stress disorder to an anonymised, rapport-building Virtual Human than in either the standardised face-to-face Post-Deployment Health Assessment (PDHA) screening or an anonymised computer version of it.

However, this doesn't mean that the ultimate outcomes are always positive; they are certainly context-dependent. A 2025 MIT study involving 981 participants found that while text-based AI interactions led to more emotional engagement and self-disclosure, they also resulted in worse psychosocial outcomes, including higher loneliness and emotional dependence. The researchers suggest that text interfaces allow users to project personalities onto AI systems, creating deeper but potentially unhealthy emotional attachments.

Communication Style Adaptation

When users know they're interacting with AI, they adapt their communication style, often unconsciously. Research on hybrid service agents - systems that combine AI with human oversight - shows that disclosing human involvement before or during an interaction leads customers to adopt a more human-oriented communication style. Studies have also shown that the emotional context affects how satisfied users are, depending on whether they believe they are dealing with a chatbot or a real person; the worst case is interacting with a chatbot in a situation likely to provoke anger. Decisions about where to use automation, and how transparent to be about it, might therefore be guided by the emotional settings a service expects to encounter.

The Complex Road Ahead

The research makes clear that AI disclosure is far from the simple transparency solution that regulators and ethicists might hope for. Mandatory disclosure addresses legitimate concerns about deception and consent. But companies also need to be aware that the very act of transparency creates a complex web of behavioural changes.

Navigating Regulatory Compliance

For companies operating internationally, the emerging patchwork of disclosure requirements creates significant compliance challenges. The EU's broad disclosure mandates will apply to any company serving European customers, while state-level requirements in the US create different or additional compliance responsibilities. Companies must develop systems capable of detecting when disclosure is required and implementing it in ways that comply with local regulations.

Systems might need to:

- Determine the appropriate level and timing of disclosure

- Adapt disclosure methods to different cultural and linguistic contexts

- Maintain disclosure records for regulatory compliance
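As a rough illustration of how these three responsibilities might fit together, the Python sketch below looks up a rule for the user's jurisdiction, picks a notice in the user's language, and keeps a record of what was shown. Everything in it is an assumption made for illustration: the rule table, the made-up region code "ZZ", the class and function names, and the German wording are placeholders, not a statement of what any law requires. The English notice is the example wording quoted earlier in this article.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class DisclosureRule:
    required: bool
    timing: str  # "start" = show the notice before the first AI message


# Hypothetical rule table; a real one would come from legal review.
RULES = {
    "EU": DisclosureRule(required=True, timing="start"),
    "ZZ": DisclosureRule(required=False, timing="start"),  # made-up region, no mandate
}
# Conservative default: disclose when the jurisdiction is unknown.
DEFAULT_RULE = DisclosureRule(required=True, timing="start")

MESSAGES = {
    "en": "You are now chatting with an AI assistant.",
    "de": "Sie chatten jetzt mit einem KI-Assistenten.",
}


@dataclass
class DisclosureLog:
    records: list = field(default_factory=list)

    def record(self, session_id, jurisdiction, language, rule, shown):
        # Keep an auditable trace of each decision, shown or not.
        self.records.append({
            "session_id": session_id,
            "jurisdiction": jurisdiction,
            "language": language,
            "timing": rule.timing,
            "shown": shown,
            "at": datetime.now(timezone.utc).isoformat(),
        })


def disclose(session_id: str, jurisdiction: str, language: str,
             log: DisclosureLog) -> Optional[str]:
    """Return the notice to show at the start of a session, or None."""
    rule = RULES.get(jurisdiction, DEFAULT_RULE)
    # Fall back to English when no translation exists.
    message = MESSAGES.get(language, MESSAGES["en"]) if rule.required else None
    log.record(session_id, jurisdiction, language, rule, message is not None)
    return message


log = DisclosureLog()
print(disclose("session-1", "EU", "de", log))  # German notice
print(disclose("session-2", "ZZ", "en", log))  # None: no notice required here
print(len(log.records))                        # 2: both decisions are logged
```

Defaulting to disclosure for unknown jurisdictions is a deliberate design choice in this sketch: given the penalties involved, an unnecessary notice is likely to be cheaper than a missing one.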

The Design Challenge

Perhaps most challenging is the question of how to design disclosure in ways that meet legal requirements whilst taking into account the behavioural impacts. Research suggests several potential approaches:

Context-Sensitive Disclosure: Companies might consider how to adhere to transparency regulations based on the specific use case, user demographics, and interaction context. What is the likely emotional state of the user? How does this affect choices regarding how transparency is delivered and the impact of that transparency?

Graduated Disclosure: Some researchers suggest a layered approach where basic AI disclosure is provided upfront, with more detailed information available on demand.

Expectation Management: Given that negative reactions often stem from unmet expectations, systems might focus on clearly communicating AI capabilities and limitations rather than just identity. Will people have access to a real human, and if so under what circumstances?

Cultural Adaptation: Since attitudes toward AI vary significantly across regions, disclosure systems, hybrid AI/human provision, and expectation management may need to adapt to local cultural norms.
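The Graduated Disclosure and Expectation Management ideas above can be sketched in a few lines of Python: a short notice is always shown first, and further layers are returned only when the user asks. The topic names and second-layer texts are hypothetical placeholders. Only the opening notice reuses the example wording quoted earlier in this article.

```python
BASIC_NOTICE = "You are now chatting with an AI assistant."

# Hypothetical second-layer texts, shown only on request.
DETAILS = {
    "limits": "This assistant can make mistakes and may not know your full history.",
    "human": "You can ask to be transferred to a human agent at any time.",
}


def opening_notice() -> str:
    """First layer: always shown before the conversation starts."""
    return BASIC_NOTICE


def more_detail(topic: str) -> str:
    """Second layer: detail on one topic, or a list of the topics on offer."""
    if topic in DETAILS:
        return DETAILS[topic]
    available = ", ".join(sorted(DETAILS))
    return f"More information is available on: {available}."


print(opening_notice())
print(more_detail("human"))
print(more_detail("pricing"))  # unknown topic: lists "human, limits"
```

Keeping the first layer short limits friction for users who only need to know that they are talking to a machine, while the second layer addresses the expectation questions raised above, such as whether and when a real human is available.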

Ethical Considerations Beyond Compliance

The research raises profound questions about the ethics of AI disclosure that go beyond simple regulatory compliance. If disclosure significantly reduces the therapeutic benefits of AI counselling systems, is mandatory transparency always ethical? How do we balance respect for human autonomy, and the right to know, with the impact of knowing?

Transparency, Innovation and Human Behaviour

As disclosure requirements take effect, companies will need to develop systems that remain effective even when their artificial nature is known, and consider applications that balance human approaches with AI. This might drive innovation toward:

- More sophisticated AI systems that can maintain engagement despite disclosure

- Better integration of AI and human agents in hybrid systems, including human-led approaches that use AI as support

- Exploration of new interaction paradigms that leverage AI's unique capabilities rather than mimicking human behaviour

- Enhanced personalisation that adapts to individual user preferences about AI interaction

The companies that succeed will likely be those that view disclosure not as a barrier to overcome, but as a design constraint that drives innovation toward more transparent, trustworthy, and effective systems.

Conclusion: Embracing Complexity

The emerging research on AI disclosure reveals a landscape far more nuanced than simple transparency mandates might suggest. While the ethical arguments for disclosure are compelling - users deserve to know when they're interacting with AI - the behavioural reality is that such knowledge fundamentally changes the nature of human-AI interaction, often in ways that affect trust, engagement, and even the fundamental benefits from the experience.

As the EU AI Act's disclosure requirements take effect in 2026 and other jurisdictions develop their own frameworks, companies must prepare for a world where transparency is not optional. But they must also grapple with the complex behavioural implications that research is only just beginning to uncover.

The path forward requires acknowledgment that there are no simple solutions. Effective AI disclosure will likely require context-sensitive approaches that balance legal compliance with user experience, cultural adaptation with standards, and (sometimes) transparency with effectiveness. Companies that begin preparing now will be best positioned to tackle these issues for their specific situation as we navigate the disclosed AI future that is rapidly approaching.